"Allo is a smart messaging app that makes your conversations easier and more expressive. It's based on your phone number, so you can get in touch with anyone in your phonebook. And with deeply integrated machine learning, Allo has smart features to keep your conversations flowing and help you get things done," said a Google spokesperson.

However, WhatsApp is a behemoth. And not only WhatsApp: chat apps like WeChat and iMessage also have a huge number of users, enough to make it difficult for a new entrant to gain ground, even if that new player happens to be a Google app. The company probably knows this. Hence it has added some unique and (probably) cool features to Allo. These are:

1- Allo has a Smart Reply feature. This suggests ready-made replies to an incoming message, similar to the Smart Reply function of Google Inbox. "Smart Reply learns over time and will show suggestions that are in your style. For example, it will learn whether you're more of a 'haha' vs 'lol' kind of person. The more you use Allo the more 'you' the suggestions will become," notes Google. It also analyses the content of a message to offer suggestions. So, for example, if you get a message with a picture of a burger in it, Allo may suggest replies like "Yummy" or "tasty".

2- Allo has Google Assistant built inside it. This will let a user do Google searches without leaving the app. This is more or less similar to what Google has built inside Gboard, the keyboard app for the iPhone that the company launched a few days ago.

3- Users will also be able to chat with Google Assistant inside Allo. "And since it understands natural language patterns, you can just chat like yourself and it'll understand what you're saying. For example, 'Is my flight delayed?' will return information about your flight status," notes Google.

4- Allo has Incognito Mode. "Chats in Incognito mode will have end-to-end encryption and discreet notifications," says Google.

While this all sounds interesting, the biggest problem Allo is going to face is that people already have a chat app that works for them and their friends: WhatsApp. The Facebook-owned chat app has the critical mass to keep rolling. Everyone is on WhatsApp, so even if Allo is good, people may not opt for it because their friends are on a different chat app.

Allo will be available for both Android and iOS.

How does it work?

About a year ago, we started exploring how we can make communication easier and more fun. The idea of Smart Reply for Allo came up in a brainstorming session with my teammates Sushant Prakash and Ori Gershony who then helped me lead our team to build this technology. We began by experimenting with neural network based model architectures which had proven to be successful for sequence prediction, including the encoder-decoder model used in Smart Reply for Inbox.

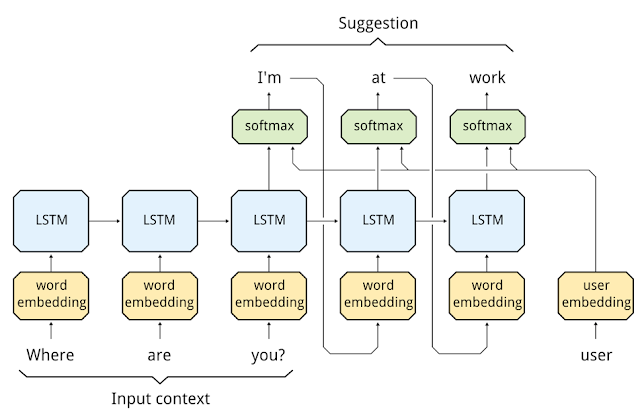

Each semantic class is associated with a set of possible messages that belong to it. We use a second recurrent network to generate a specific message from that set. This network also converts the context into a hidden LSTM state, but this time the hidden state is used to generate the full message of the reply one token at a time. For example, after seeing the context “Where are you?”, the LSTM generates the tokens of the response “I’m at work”.

As with any large-scale product, there were several engineering challenges we had to solve in generating a set of high-quality responses efficiently. For example, in spite of the two-stage architecture, our first few networks were very slow and required about half a second to generate a response. This was obviously a deal breaker for real-time communication apps! So we had to evolve our neural network architecture further to reduce the latency to less than 200ms. We moved from using a softmax layer to a hierarchical softmax layer, which traverses a tree of words instead of a list of words and is therefore more efficient.
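To make the tree idea concrete, here is a minimal sketch of a hierarchical softmax over a made-up four-word vocabulary. The tree layout, the node weights and the hidden state are all assumptions for illustration, not details of the Allo model. The point it shows is that a word's probability is a product of binary left/right decisions along its path, so scoring one word touches about log2(V) tree nodes rather than all V words.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Hypothetical 4-word vocabulary arranged as a complete binary tree.
# Each word is reached by a path of (inner_node_index, go_left) decisions.
PATHS = {
    "yes":   [(0, True), (1, True)],
    "no":    [(0, True), (1, False)],
    "maybe": [(0, False), (2, True)],
    "later": [(0, False), (2, False)],
}

# One weight vector per inner node (made-up numbers).
NODE_WEIGHTS = [
    [0.5, -0.2],
    [-0.3, 0.8],
    [0.1, 0.4],
]

def word_probability(word, hidden):
    """P(word | hidden) as a product of binary decisions along the tree path."""
    p = 1.0
    for node, go_left in PATHS[word]:
        p_left = sigmoid(dot(NODE_WEIGHTS[node], hidden))
        p *= p_left if go_left else 1.0 - p_left
    return p

hidden_state = [1.0, 2.0]  # stand-in for the LSTM hidden state
probs = {word: word_probability(word, hidden_state) for word in PATHS}
```

Because every inner node splits its probability mass between its two children, the values in `probs` still sum to one, just as they would with an ordinary softmax.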

Another interesting challenge we had to solve when generating predictions is controlling for message length. Sometimes none of the most probable responses are appropriate: if the model predicts too short a message, it might not be useful to the user, and if it predicts something too long, it might not fit on the phone screen. We solved this by biasing the beam search to follow paths that lead to higher utility responses instead of favoring just the responses that are most probable. That way, we can efficiently generate appropriate length response predictions that are useful to our users.
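The following is a rough sketch of a beam search whose ranking adds a length-based utility term to the log probability. The toy next-token table `NEXT`, the target length and the penalty weight are hypothetical; the post does not say how utility was actually defined, so treat the `score` function as one possible choice.

```python
import math

# Hypothetical toy model: next-token probabilities given the last token.
NEXT = {
    "<s>": {"ok": 0.6, "i": 0.4},
    "ok": {"</s>": 0.9, "sure": 0.1},
    "sure": {"</s>": 1.0},
    "i": {"am": 1.0},
    "am": {"at": 0.7, "ok": 0.3},
    "at": {"work": 1.0},
    "work": {"</s>": 1.0},
}
TARGET_LEN = 4       # assumed "useful" reply length in tokens
LENGTH_WEIGHT = 0.5  # assumed strength of the length bias

def score(logp, tokens, finished, use_utility):
    if not use_utility:
        return logp
    n = len(tokens)
    # A finished reply is penalized for being too short or too long; an
    # unfinished one only for overshooting, since it can still grow.
    gap = abs(n - TARGET_LEN) if finished else max(0, n - TARGET_LEN)
    return logp - LENGTH_WEIGHT * gap

def beam_search(beam_width=2, max_len=6, use_utility=True):
    beams = [(0.0, [])]  # (log probability, tokens so far)
    finished = []
    for _ in range(max_len):
        candidates = []
        for logp, tokens in beams:
            last = tokens[-1] if tokens else "<s>"
            for tok, p in NEXT[last].items():
                new_logp = logp + math.log(p)
                if tok == "</s>":
                    finished.append((new_logp, tokens))
                else:
                    candidates.append((new_logp, tokens + [tok]))
        if not candidates:
            break
        # Keep only the best partial replies under the (possibly biased) score.
        candidates.sort(key=lambda c: score(c[0], c[1], False, use_utility),
                        reverse=True)
        beams = candidates[:beam_width]
    best = max(finished, key=lambda f: score(f[0], f[1], True, use_utility))
    return " ".join(best[1])
```

With `use_utility=False` the search returns the most probable reply, the one-word "ok"; with the length bias switched on it prefers the longer "i am at work", even though that reply is less probable under the toy model.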

Personalized for you

The best part about these suggestions is that over time they are personalized to you so that your individual style is reflected in your conversations. For example, if you often reply to “How are you?” with “Fine.” instead of “I am good.”, it will learn your preference and your future suggestions will take that into account. This was accomplished by incorporating a user's "style" as one of the features in a neural network that is used to predict the next word in a response, resulting in suggestions that are customized for your personality and individual preferences. The user's style is captured in a sequence of numbers that we call the user embedding. These embeddings can be generated as part of the regular model training, but this approach requires waiting many days for training to complete and cannot handle more than a few million users. To solve this issue, Alon Shafrir implemented an L-BFGS-based technique to generate user embeddings quickly and at scale. Now, you'll be able to enjoy personalized suggestions after only a short time of using Allo.
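As an illustration only, the sketch below fits a tiny user embedding with a hand-written L-BFGS loop while everything else stays frozen. The post gives no details of the real objective, so the `STYLE` vectors, the reply history, the softmax likelihood and the regularization constant are all assumptions made up for this example.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Hypothetical frozen "style" vectors for candidate replies (made-up numbers).
STYLE = {"Fine.": [1.0, 0.0], "I am good.": [0.0, 1.0]}
# Hypothetical history: (candidates shown, reply the user actually sent).
HISTORY = [(["Fine.", "I am good."], "Fine.")] * 5
L2 = 0.1  # assumed regularization strength

def loss_and_grad(u):
    """Negative log-likelihood of the user's choices plus an L2 penalty."""
    loss = 0.5 * L2 * dot(u, u)
    grad = [L2 * ui for ui in u]
    for candidates, chosen in HISTORY:
        logits = [dot(STYLE[c], u) for c in candidates]
        top = max(logits)
        exps = [math.exp(z - top) for z in logits]
        total = sum(exps)
        loss -= dot(STYLE[chosen], u) - top - math.log(total)
        for c, e in zip(candidates, exps):
            for i, v in enumerate(STYLE[c]):
                grad[i] += (e / total) * v
        for i, v in enumerate(STYLE[chosen]):
            grad[i] -= v
    return loss, grad

def lbfgs(f_and_grad, x0, memory=5, iters=50):
    x = list(x0)
    fx, g = f_and_grad(x)
    history = []  # recent (s, y, rho) triples, oldest first
    for _ in range(iters):
        if math.sqrt(dot(g, g)) < 1e-8:
            break
        # Two-loop recursion: approximate inverse Hessian times gradient.
        q, alphas = list(g), []
        for s, y, rho in reversed(history):
            a = rho * dot(s, q)
            alphas.append(a)
            q = [qi - a * yi for qi, yi in zip(q, y)]
        if history:
            s, y, _ = history[-1]
            gamma = dot(s, y) / dot(y, y)
            q = [gamma * qi for qi in q]
        for (s, y, rho), a in zip(history, reversed(alphas)):
            b = rho * dot(y, q)
            q = [qi + (a - b) * si for qi, si in zip(q, s)]
        d = [-qi for qi in q]
        # Backtracking line search with the Armijo condition.
        step, slope = 1.0, dot(g, d)
        while True:
            x_new = [xi + step * di for xi, di in zip(x, d)]
            f_new, g_new = f_and_grad(x_new)
            if f_new <= fx + 1e-4 * step * slope or step < 1e-10:
                break
            step *= 0.5
        s = [a - b for a, b in zip(x_new, x)]
        y = [a - b for a, b in zip(g_new, g)]
        if dot(s, y) > 1e-12:  # keep only pairs with positive curvature
            history = (history + [(s, y, 1.0 / dot(s, y))])[-memory:]
        x, fx, g = x_new, f_new, g_new
    return x

user_embedding = lbfgs(loss_and_grad, [0.0, 0.0])
```

After fitting, `dot(STYLE["Fine."], user_embedding)` is larger than the score for "I am good.", which is the kind of preference the paragraph above describes. Optimizing only the small per-user vector is what would make this cheap compared with retraining the whole model.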

More than just English

The neural network model described above is language-agnostic, so building separate prediction models for each language works quite well. To make sure that responses for each language benefit from our semantic understanding of other languages, Sujith Ravi came up with a graph-based machine learning technique that can connect possible responses across languages. Dana Movshovitz-Attias and Peter Young applied this technique to build a graph that connects responses to incoming messages and to other responses that have similar word embeddings and syntactic relationships. It also connects responses with similar meaning across languages based on the machine translation models developed by our Translate team.

With this graph, we use semi-supervised learning, as described in this paper, to learn the semantic meaning of responses and determine which are the most useful clusters of possible responses. As a result, we can allow the LSTM to score many possible variants of each possible response meaning, allowing the personalization routines to select the best response for the user in the context of the conversation. This also helps enforce diversity as we can now pick the final set of responses from different semantic clusters.
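A simple way to picture semi-supervised learning on such a graph is plain label propagation, used here as a stand-in and not as the method from the paper. In this sketch a few seed responses carry a known semantic label and every other response repeatedly takes the weighted average of its neighbors' label distributions. The edges, weights and seed labels are invented for the example.

```python
# Hypothetical response graph: edges link responses judged similar, including
# cross-language links of the kind a translation model might supply.
EDGES = {
    ("hi", "hello"): 1.0,
    ("hello", "bonjour"): 0.8,
    ("bonjour", "salut"): 1.0,
    ("thanks", "thank you"): 1.0,
    ("thank you", "merci"): 0.8,
    ("hello", "thanks"): 0.1,  # weak, noisy link between the two groups
}
SEEDS = {"hi": "greeting", "thanks": "gratitude"}  # the only labeled nodes
LABELS = ["greeting", "gratitude"]

def propagate(edges, seeds, labels, iterations=50):
    neighbors = {}
    for (a, b), w in edges.items():
        neighbors.setdefault(a, []).append((b, w))
        neighbors.setdefault(b, []).append((a, w))
    # Seeds start one-hot; unlabeled nodes start with a uniform distribution.
    dist = {}
    for node in neighbors:
        if node in seeds:
            dist[node] = {l: 1.0 if l == seeds[node] else 0.0 for l in labels}
        else:
            dist[node] = {l: 1.0 / len(labels) for l in labels}
    for _ in range(iterations):
        updated = {}
        for node, links in neighbors.items():
            if node in seeds:
                updated[node] = dist[node]  # seed labels stay fixed
                continue
            total = sum(w for _, w in links)
            updated[node] = {
                l: sum(w * dist[other][l] for other, w in links) / total
                for l in labels
            }
        dist = updated
    # Assign each response to its most likely semantic cluster.
    return {n: max(labels, key=lambda l: dist[n][l]) for n in neighbors}

clusters = propagate(EDGES, SEEDS, LABELS)
```

In this toy graph the French responses "bonjour" and "salut" end up in the greeting cluster and "merci" in the gratitude cluster, although only two English responses were labeled.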

Here’s an example of how the graph might look for a set of messages related to greetings:

Beyond Smart Reply

I am also very excited about the Google assistant in Allo with which you can converse and get information about anything that Google Search knows about. It understands your sentences and helps you accomplish tasks directly from the conversation. For example, the Google assistant can help you discover a restaurant and reserve a table from within the Allo app when chatting with your friends. This has been made possible because of the cutting-edge research in natural language understanding that we have been doing at Google. More details to follow soon!

These smart features will be part of the Android and iOS apps for Allo that will be available later this summer. We can’t wait for you to try and enjoy it!

